Get 95 %-plus accuracy on complex reasoning & multimodal data.

Launch in 14 days. No lock-in.

Accelerate your LLM reasoning and coding capabilities with high-quality, proprietary human data for supervised fine-tuning (SFT), reinforcement learning from human feedback (RLHF), and direct preference optimization (DPO).

| Leading Providers are Great At | Where Ascentt Complements |

|---|---|

| Massive, eight-figure volume projects | Projects from 50k to 5M labels—fast, flexible |

| Accuracy ≥ 95% (black-box QC) | Same 95% target plus real-time QA dashboard & per-error rebates |

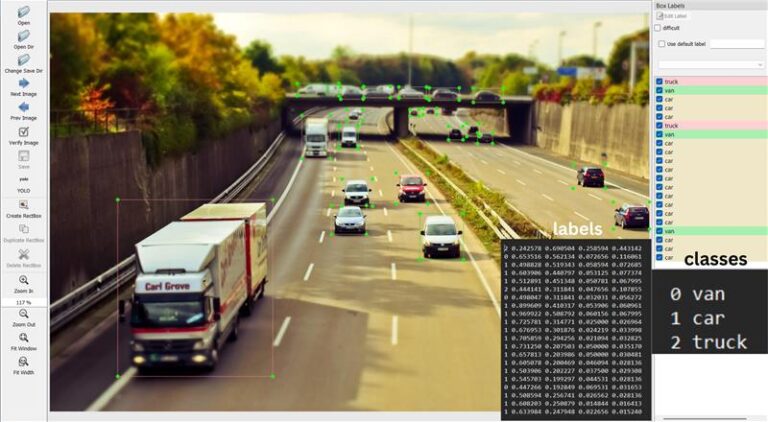

| RLHF pipelines for frontier labs | Reasoning Annotation Pods— step-by-step chain-of-thought on text, images, video |

Domain-experts + prompt engineers craft chain-of-thought labels your LLM can mimic—no hallucination guessing.

Monthly, cancel-anytime. Spin up a parallel pipeline in < 2 weeks to de-risk single-vendor dependence.

Pay for validated accuracy, not for head-count. Live dashboards, exportable audits.

Need FedRAMP, HIPAA, or GDPR + local hosting? We deploy air-gapped pods behind your firewall.

From spec design to SDK hand-off—our solution architects sit with your ML team, compressing iteration loops by 35 % (median).

Engineered for the complex demands of global enterprises: merging cutting-edge Al with governance precision,

operational resilience, and uncompromising accountability.

Intuitively designed to align effortlessly within your existing workflows & environment.

Proven track record of delivering Mn$ value to our enterprise customers.

Extensive experience with AI/ML technologies, long before AI became mainstream.